Defy Reality™ – The 3D Commerce Blog

What is furniture visualization?

Furniture visualization includes the creation of 3D digital models of real furniture SKUs and distribution of ...

3D Cloud Furniture Shopping Trends 2024 overview

During this week's Shoptalk event, George Hanson, EVP, Chief Digital Officer at Mattress Firm, ...

What is furniture e-commerce?

Furniture e-commerce is a way to sell and buy furniture and home goods on digital platforms. It covers every part ...

What is composable commerce?

Composable commerce is a way of building flexible online shopping experiences by combining separate software ...

What is visual merchandising?

Visual merchandising is a way of presenting products in stores to attract more shoppers and grow sales. Displays ...

Discover the impact of 3D applications on lead qualification and resource optimization in the evolving retail landscape.

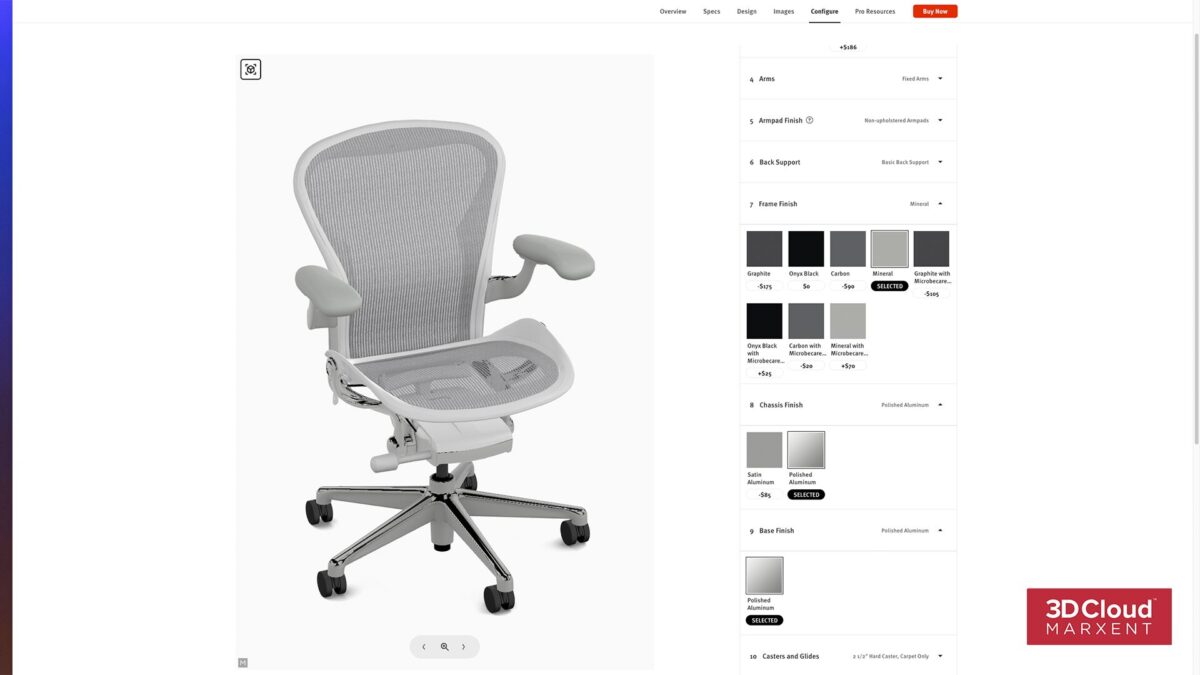

What Is a Product Configurator?

A product configurator is a tool that lets customers choose how they want a product to look or function. It ...

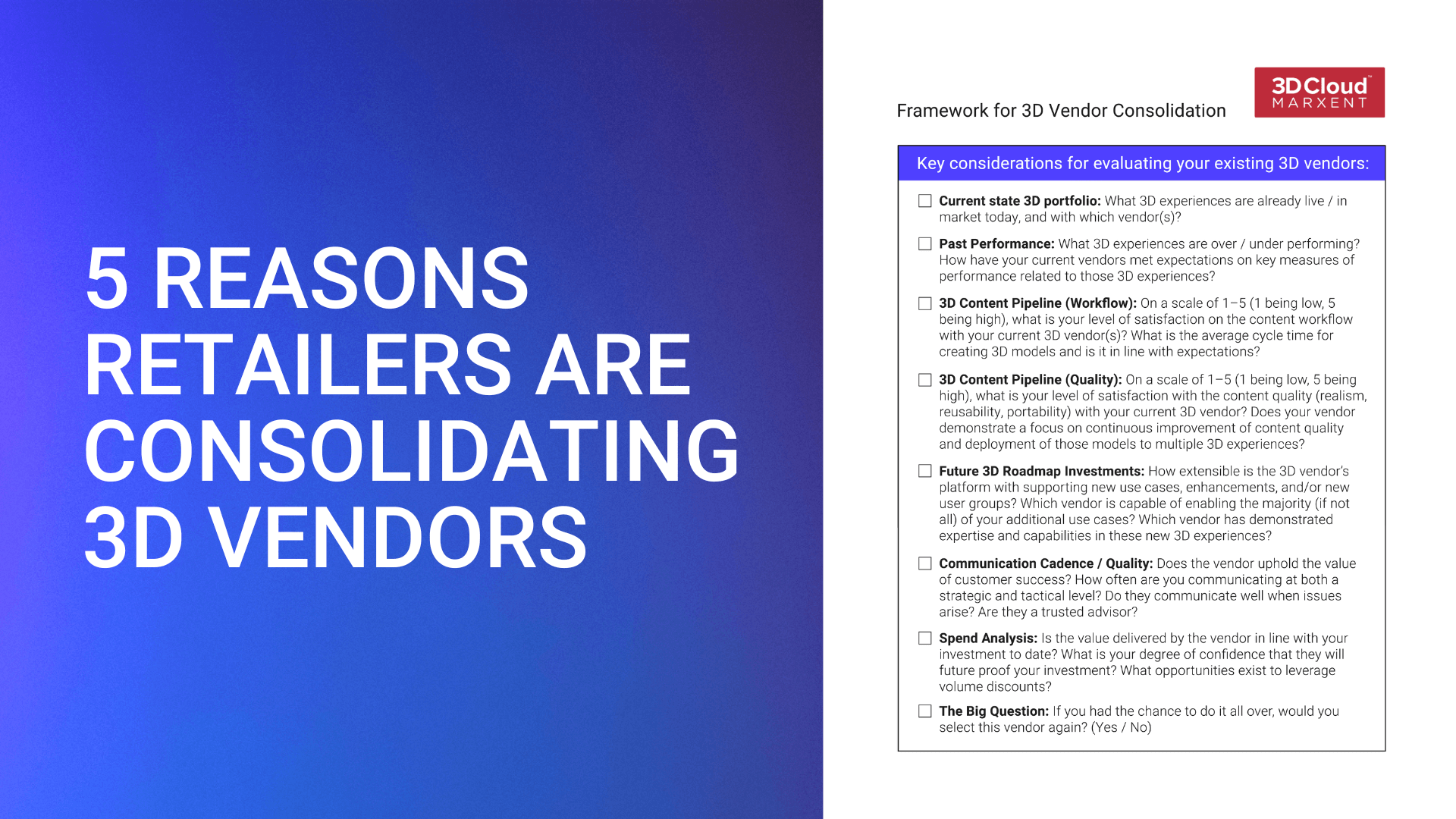

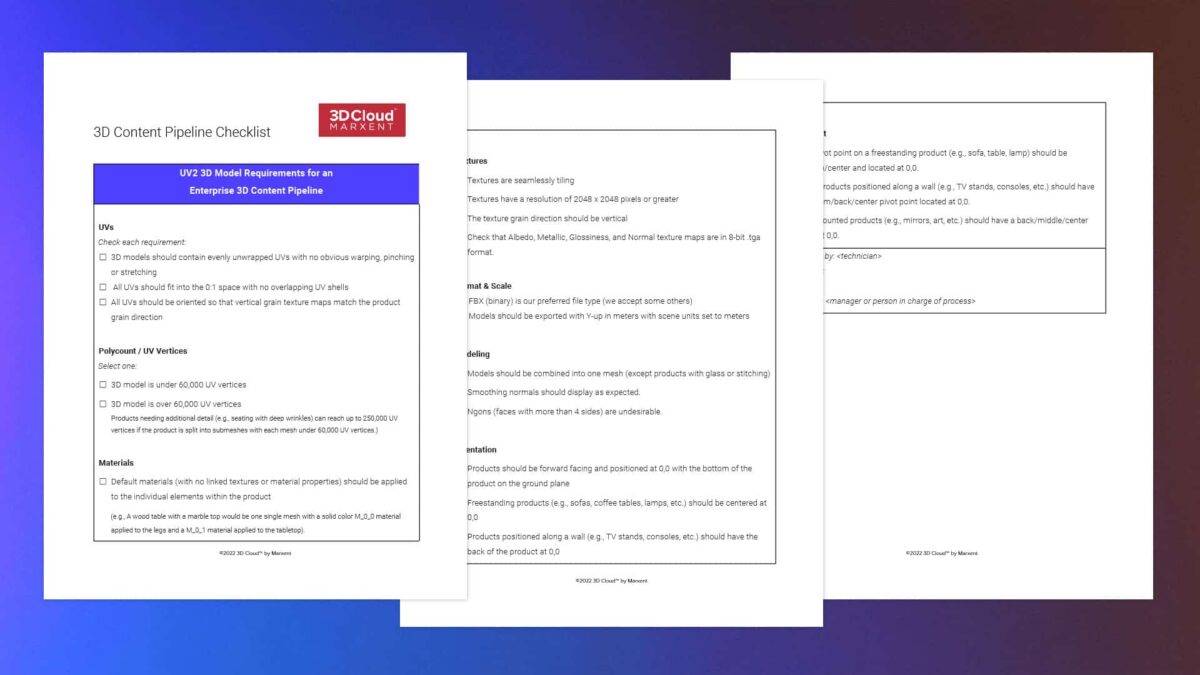

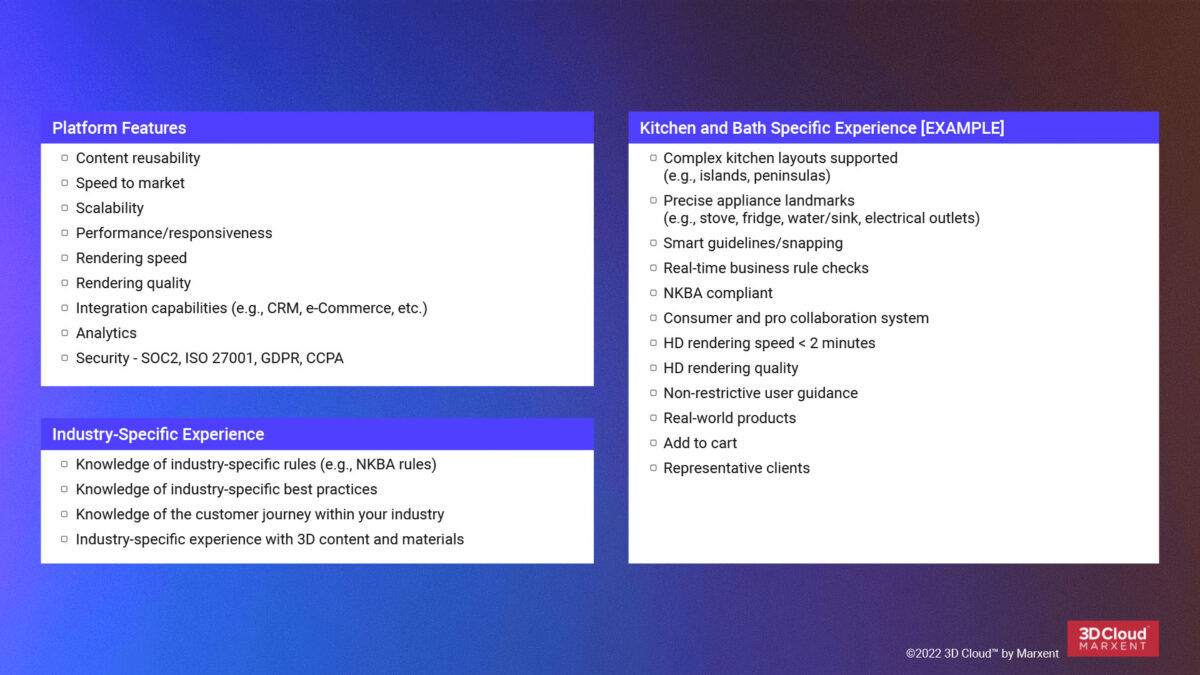

If you are a 3D, IT, e-commerce, merchandising or procurement lead responsible for vendor management, this article is for you.

As your 3D ...

What is a USD file?

A USD, or Universal Scene Description, file is a 3D asset format and much more. USD provides a framework for artists to ...

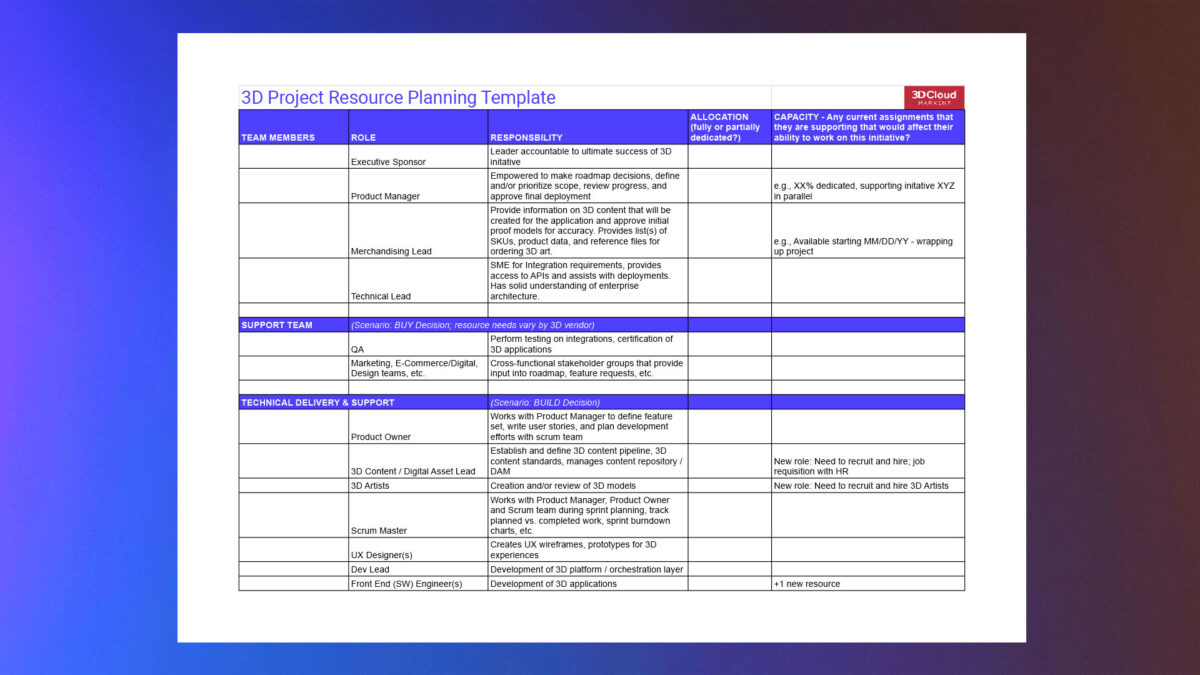

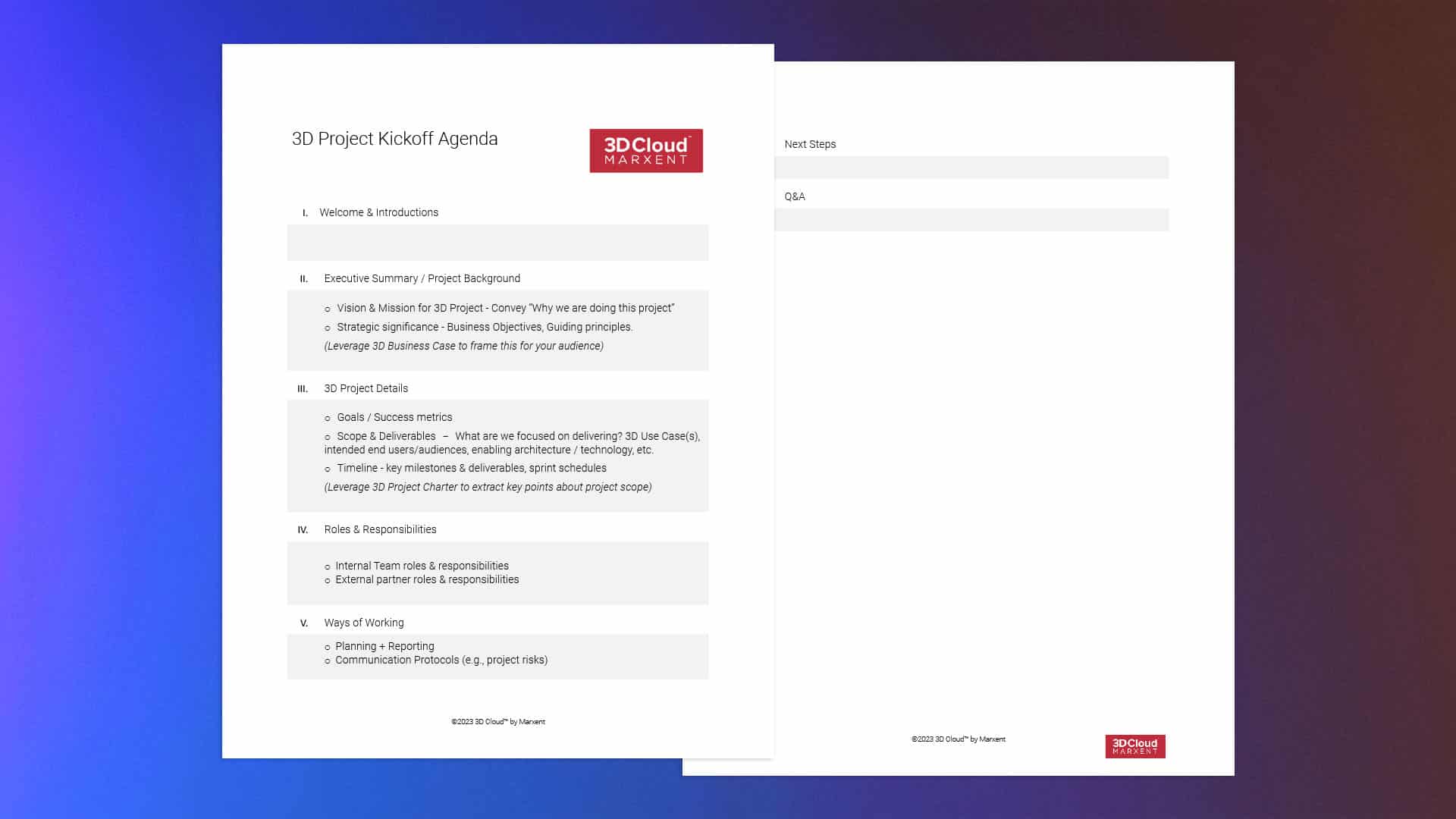

If you are a product, technology, marketing, digital innovation or e-commerce professional responsible for launching a new 3D initiative, this article is for you.

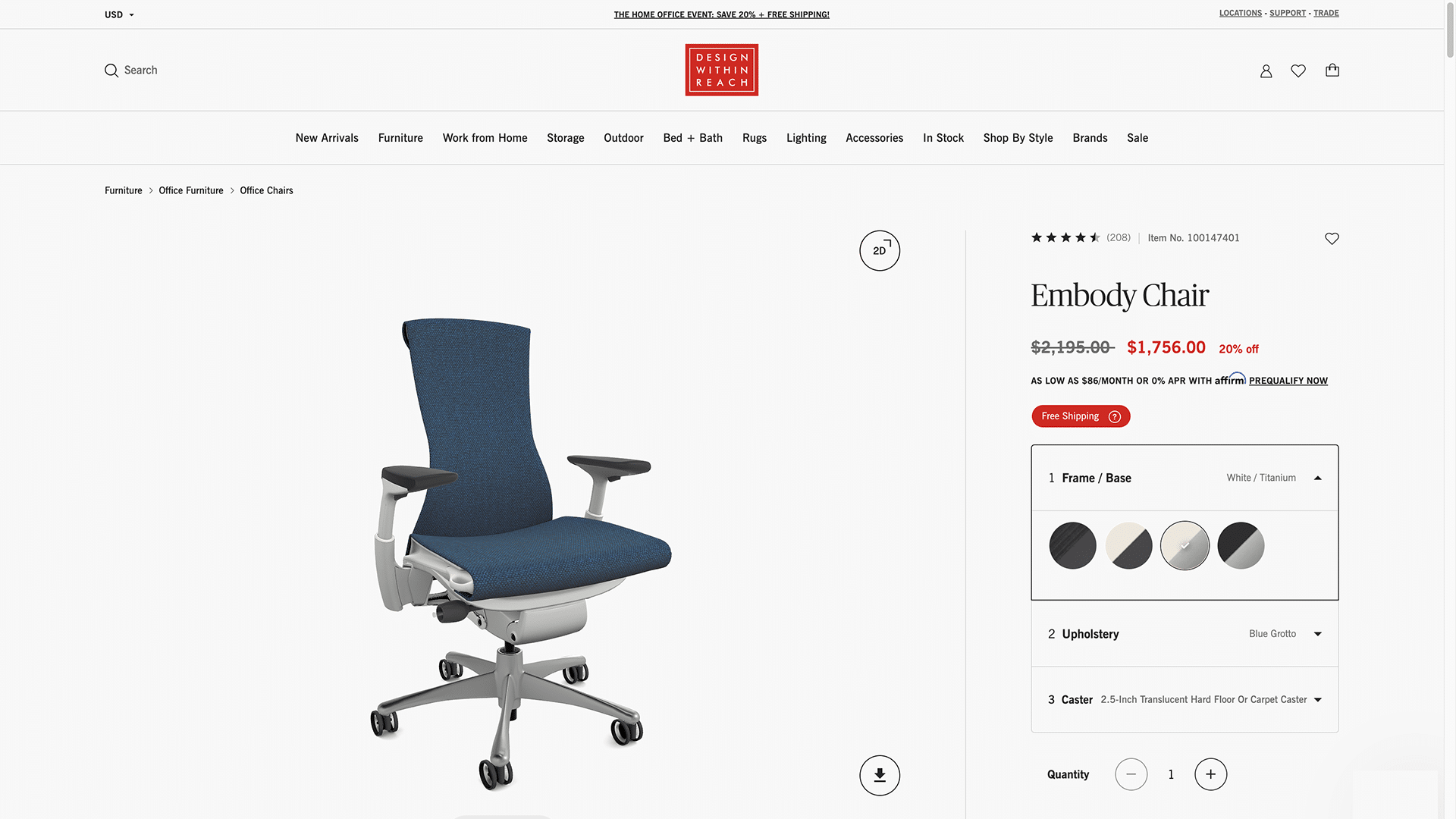

Here are 6 ways that interior design teams from major retailers like La-Z-Boy, Joybird, and Design Within Reach are using 3D to improve the in-house interior design experience.

From the Metaverse to AR everywhere, 2023 is bringing a new degree of maturity to 3D -- especially in furniture and home improvement. Here’s who’s using it, how 3D is delivering value, and where things are headed.

What is a STEP or STP file?

A STEP file is a commonly used standard computer-aided design (CAD) file format. STEP files, also called STP files, ...

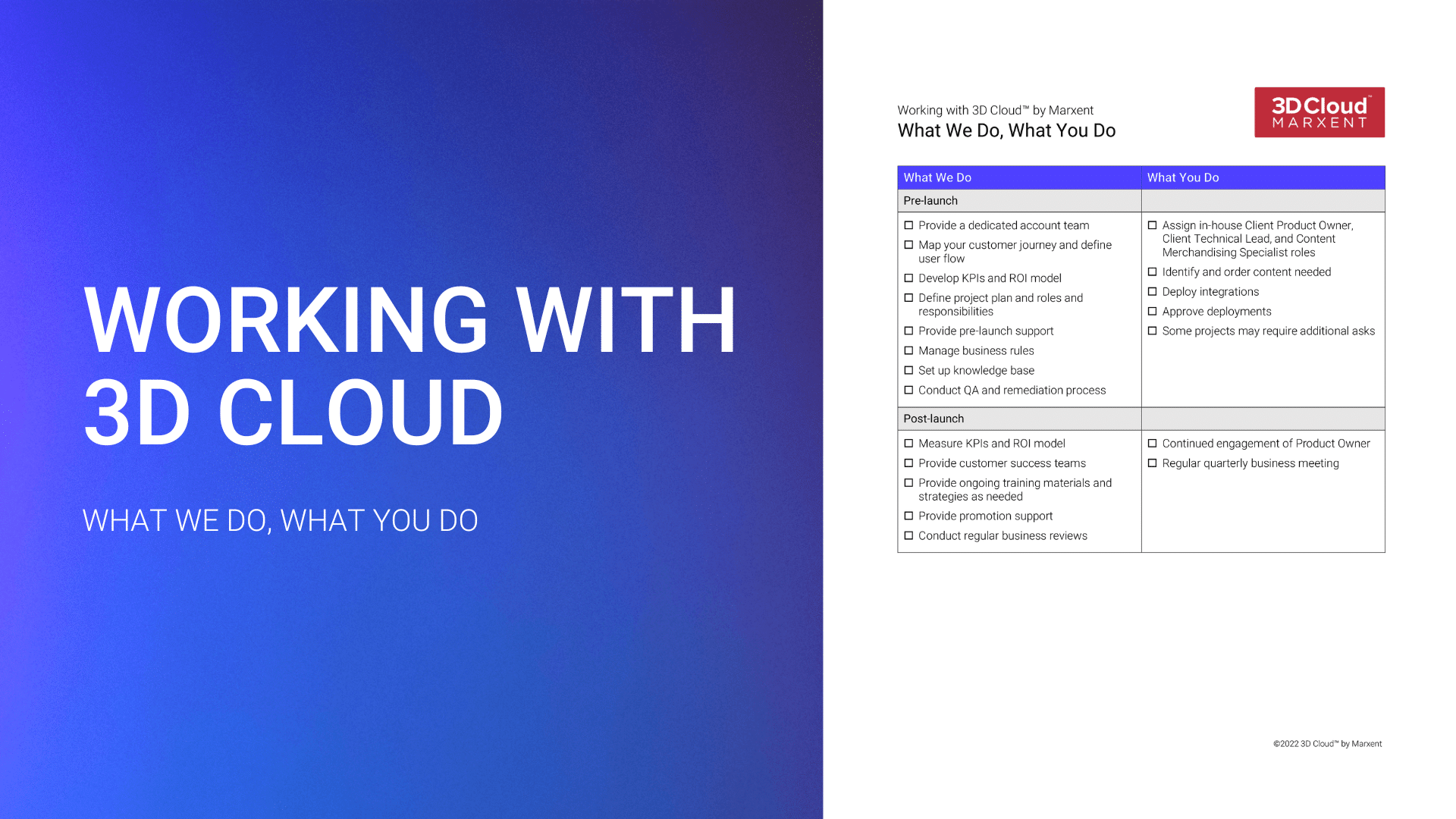

If you are a retail, e-commerce, technology, marketing, product management or project management leader working on a strategic 3D initiative, this is for you.

If you are a retail, e-commerce, technology, marketing, product management or project management leader working on a strategic 3D initiative, this article is for you.

Learn about WebAR and how it can level up your digital content. Plus, benefits, examples, and how to get started.

How ecommerce photography can help increase sales, what you need to take photos yourself, and questions to ask if you want to hire a pro.

Explore the basics of 360 product photography. This guide covers the equipment, how to take the photos and how they can help your sales.

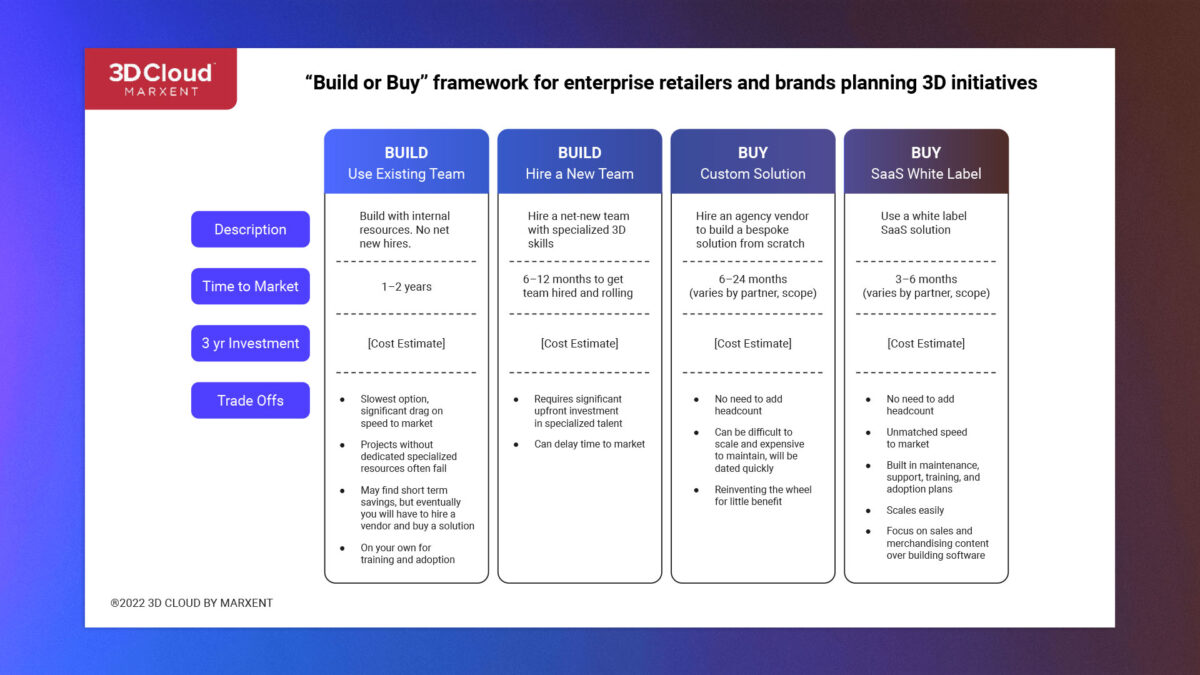

Build or buy: Why retailers choose to outsource 3D projects - or not.

Learn by example how different industries use WebAR technology to level up digital content and the customer experience.

The metaverse will usher in a new era of immersive content supported by a robust creator economy. Learn how to create now to engage consumers today & tomorrow.

To design a successful 3D initiative or enterprise-wide 3D strategy, you need to first understand 3D content. A solid understanding of 3D ...

The metaverse offers opportunities for brands that adopt Web3 habits. Learn what it takes to make it the core of your revenue from examples and pro tips.

Congratulations to our client, Kinsman, for being our November 2022 Partner Innovation Spotlight! Kinsman, a leading Australian manufacturer, ...

2022 YEAR IN REVIEW

1. Platform Before Apps: For larger retailers and manufacturers, investing in apps has become secondary to investing in ...

Stand out and publish fresh visual merchandising content daily -- without needing to stage an expensive photoshoot. Sounds good, right? Today’s ...

Congratulations to our partner, PlaceMakers, for being recognized as our Partner Innovation Spotlight. PlaceMakers is New Zealand’s leading supplier of building materials and hardware.

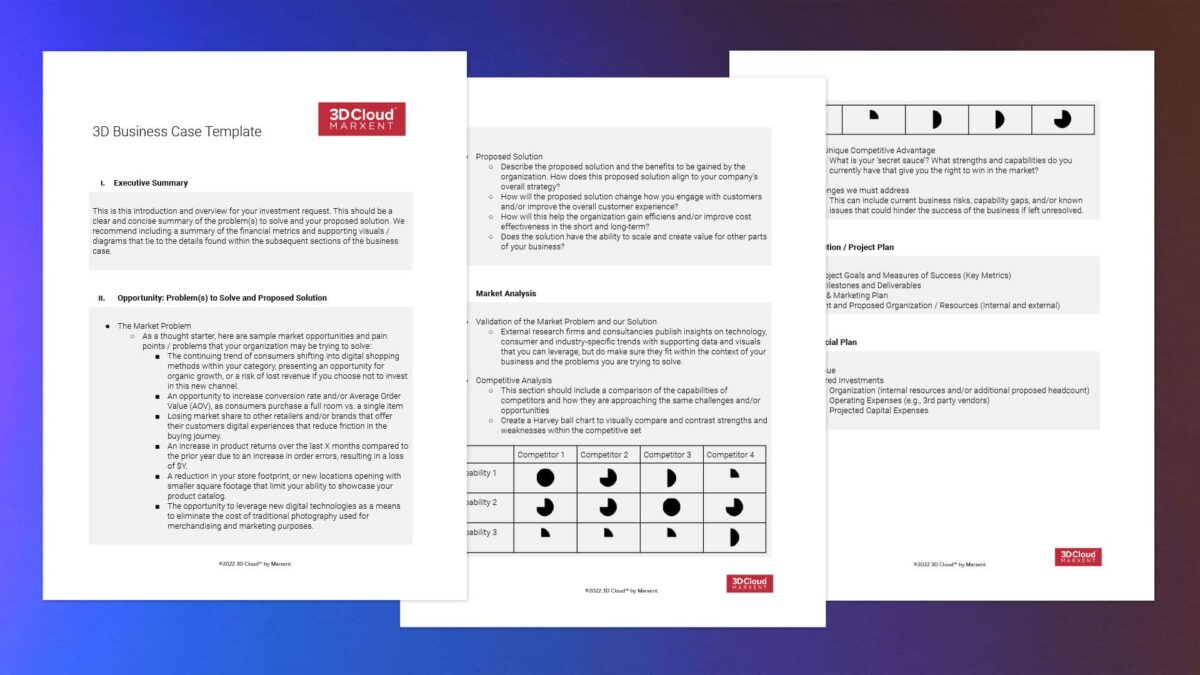

In a sea of other initiatives vying for dollars and resources, a compelling business case is the key to securing funding for new 3D ...

We would like to congratulate our client, Jerome's, for being our September Partner Innovation Spotlight!

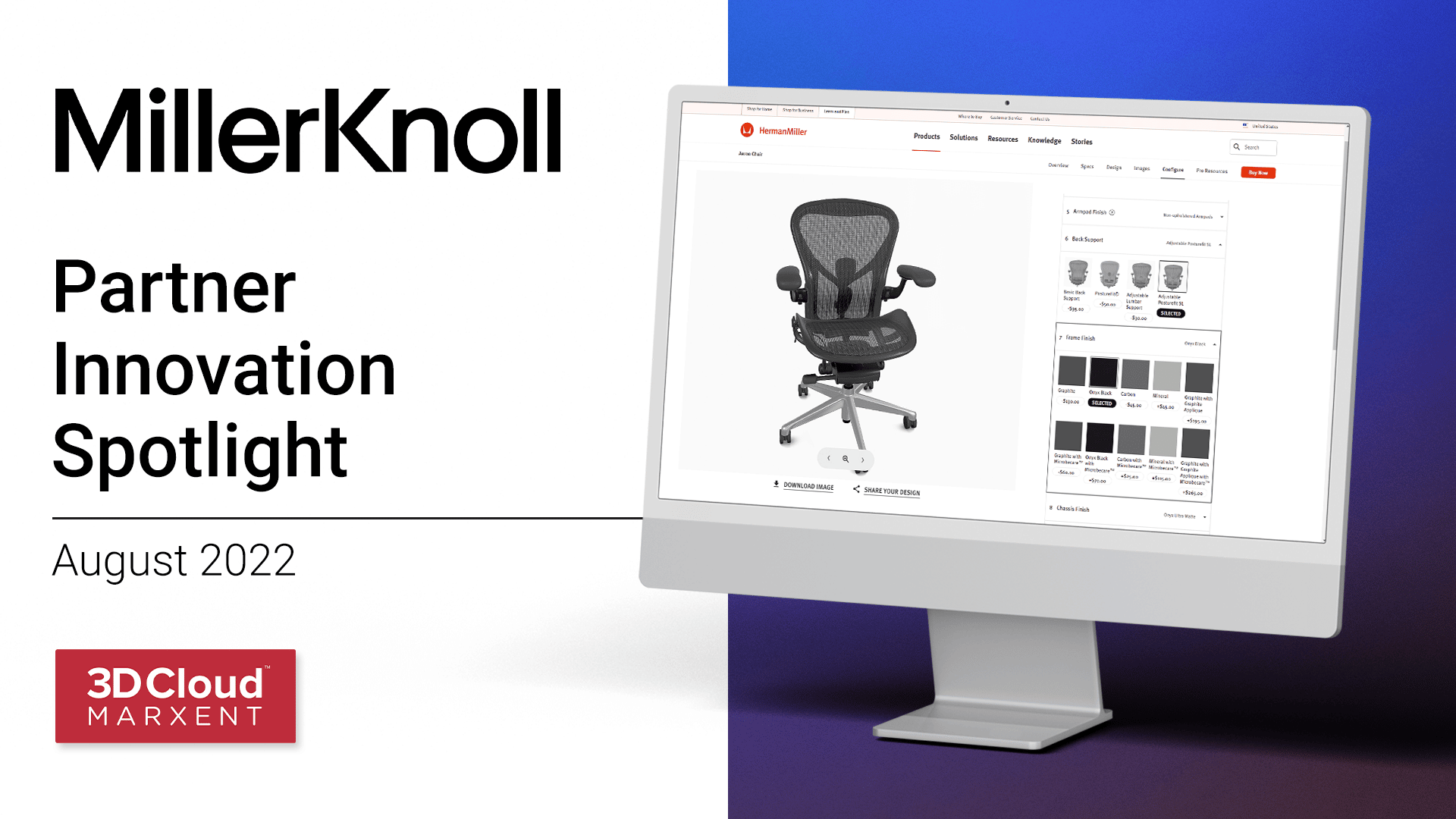

Congrats to our partner, MillerKnoll, on the successful launch of their new, code-free system for building and maintaining 3D product configurators powered by 3D Cloud™ by Marxent.

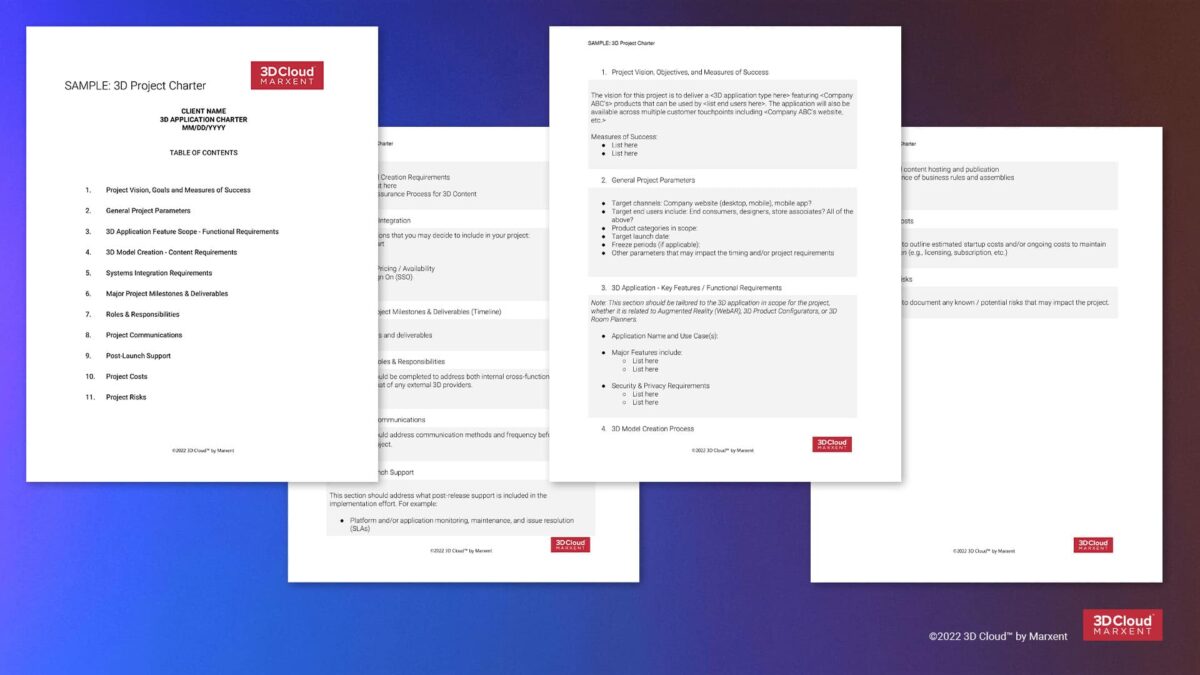

Utilizing our many years of experience in running 3D projects, we’ve created this free 3D project charter template to make it easy for you to get started. Download today.

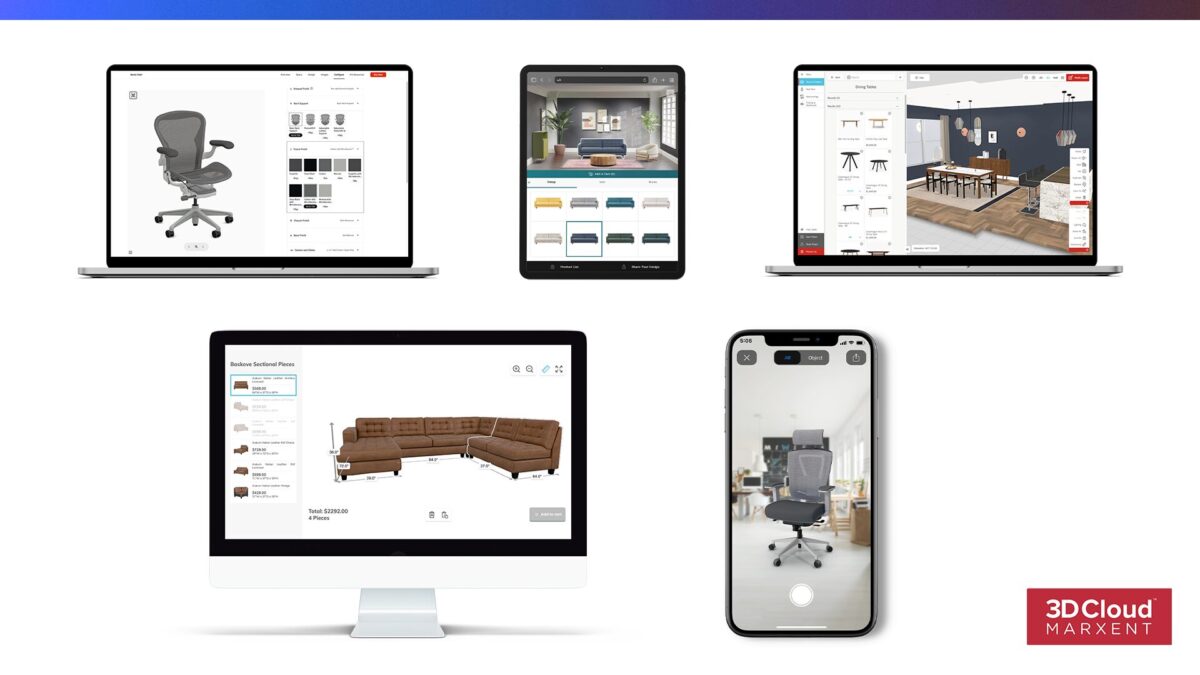

Augmented Reality

Consumer Problem: I want to check products for fit and look before I make a purchase.

Retailer Problem: I want to offer a ...

We make 3D easy for enterprise retailers and manufacturers. Fast, scalable, secure, proven, and with a single platform for every 3D commerce journey, 3D Cloud™ by Marxent does all of the heavy lifting so enterprises can launch new 3D initiatives quickly without hiring a specialized team.

If you've been tasked with launching a 3D initiative but are just getting started with 3D, this guide to 3D vocabulary provides a fast and easy ...

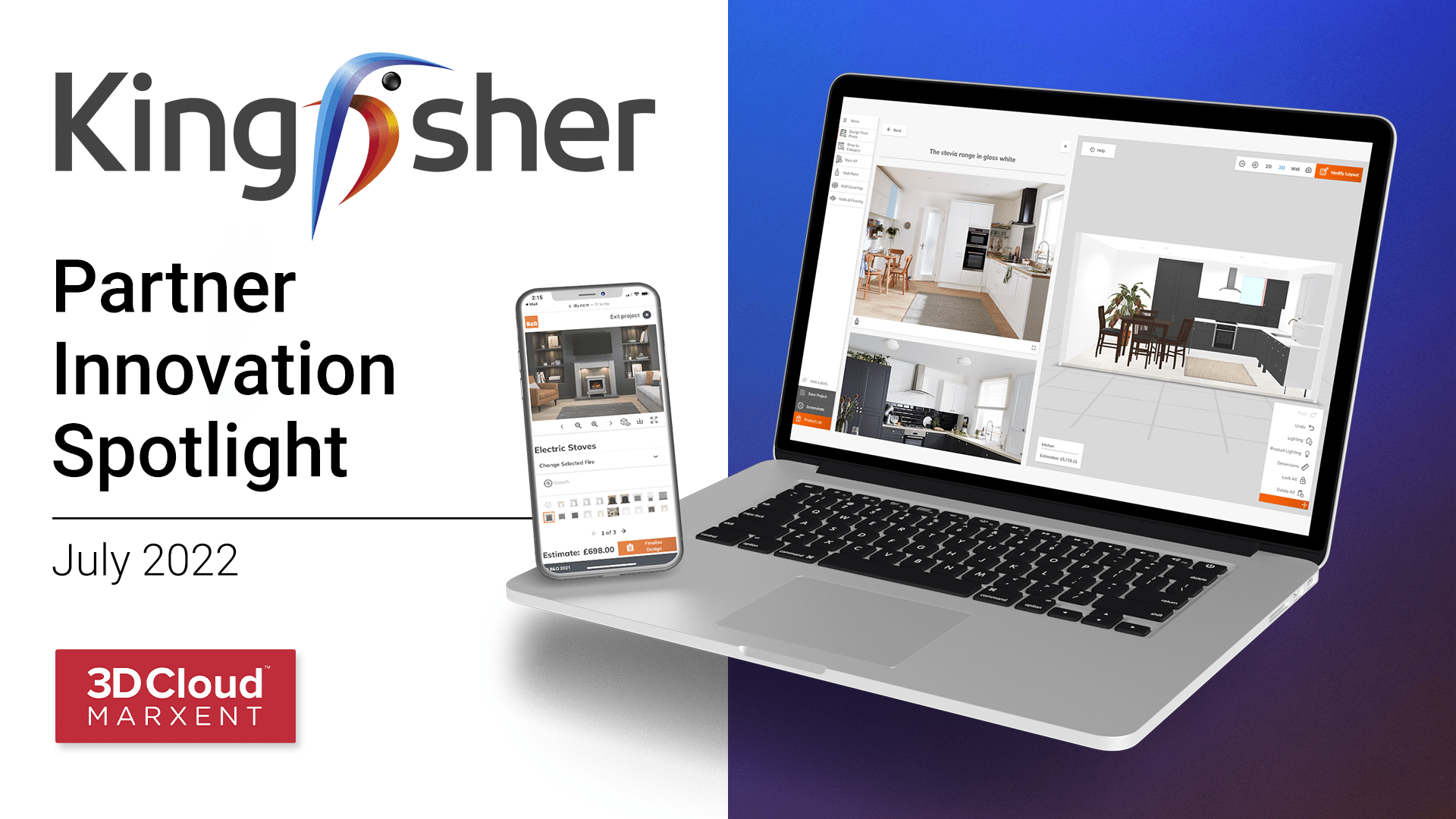

Congrats to our partner, Kingfisher, on the successful launch of their new 3D visualisation, planning, and design tool powered by 3D Cloud™ by Marxent.

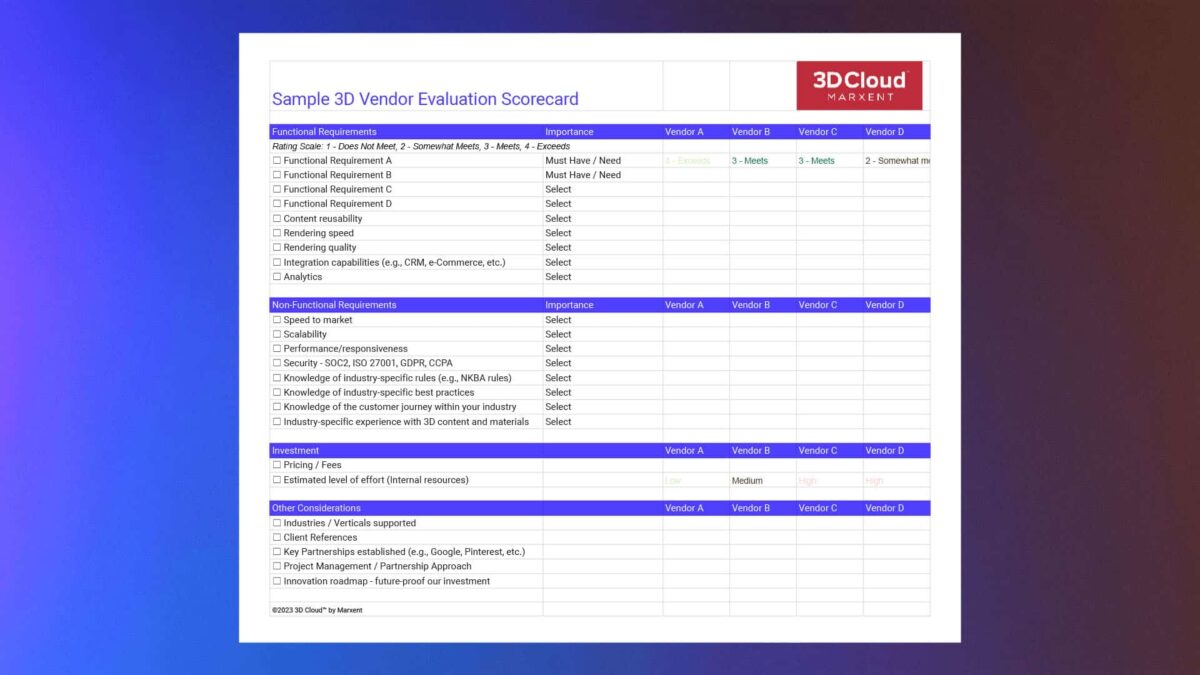

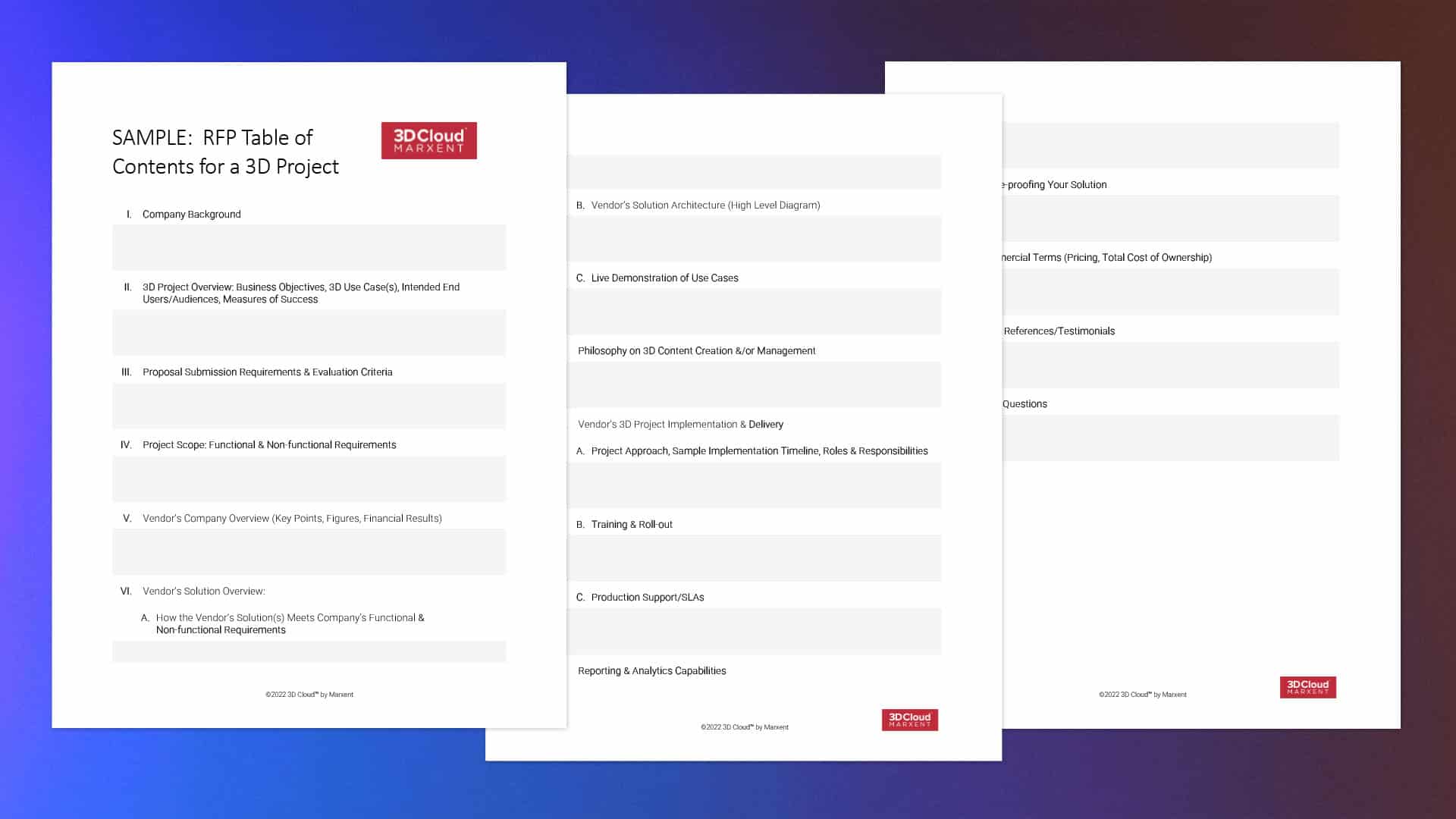

Accelerate your 3D Partner evaluation process and increase your confidence in vendor selection with a time-saving resource.

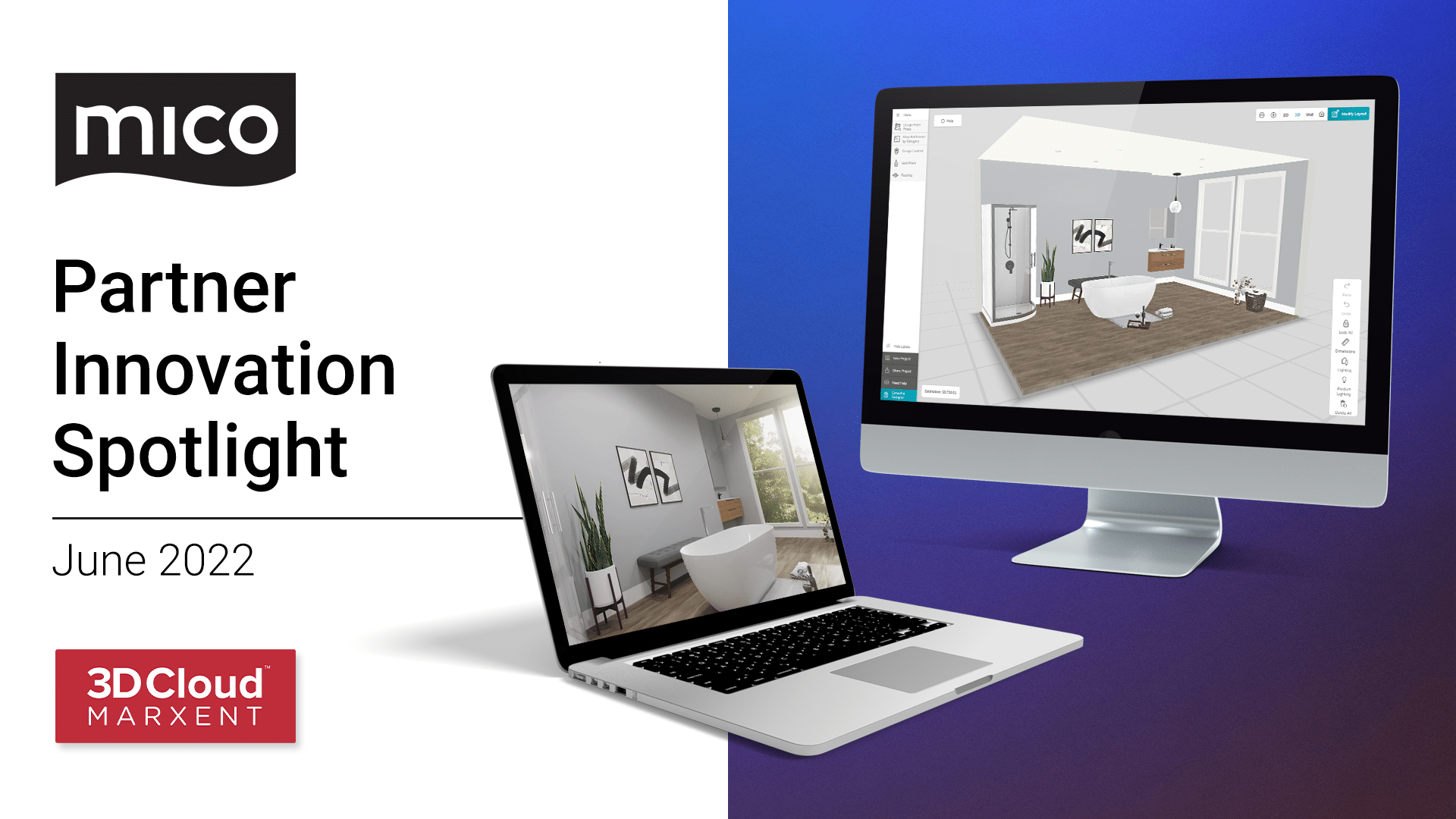

Congrats to our partner, Mico, on the successful launch of their Bathroom Design Planner powered by 3D Cloud™ by Marxent.

What Is the Metaverse?

The metaverse is a network of immersive digital worlds. People socialize, work, and play there, parallel to our physical ...

Choosing the right vendor for your vertical is essential to success. Learn more about why industry expertise matters in 3D. Includes downloadable checklists and the most important considerations for choosing your 3D vendor.

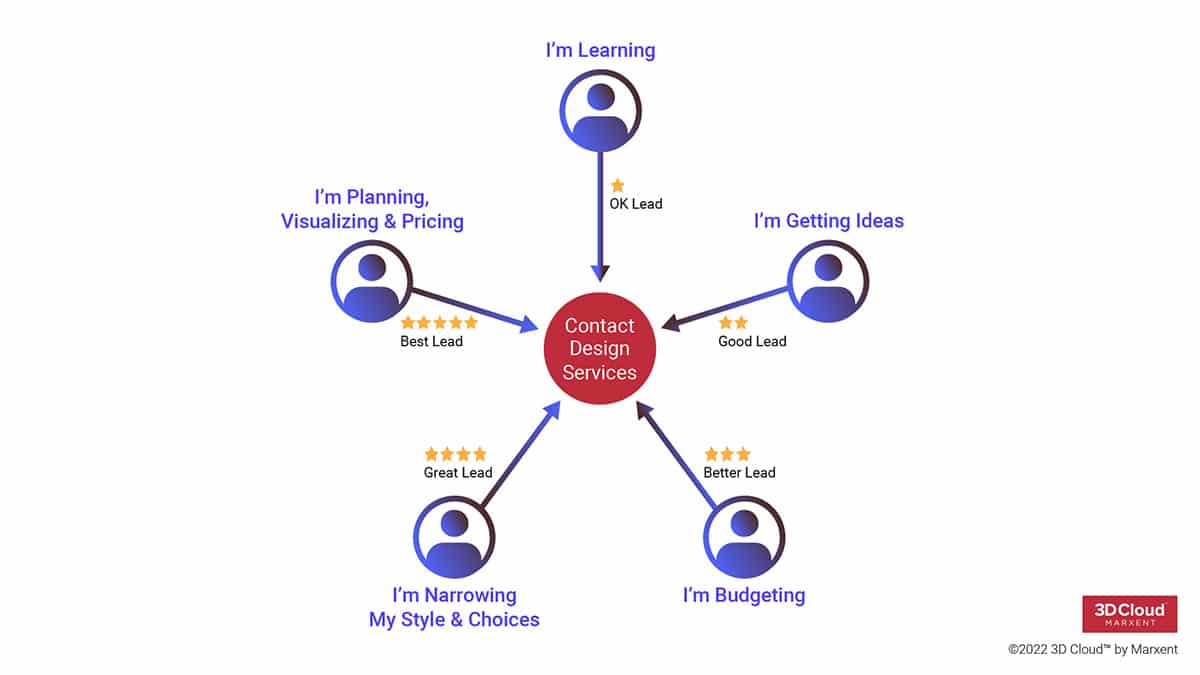

Generate higher quality virtual design services leads by delivering the right application at the right time to the right buyer and building a comprehensive profile of buyer signals for each lead.

Learn the basics of 3D photography and discover methods for creating 3D visuals to promote your e-commerce business.

What steps did you take to reach your level of success? I first realized I wanted to get into software development, particularly that ...

What steps did you take to reach your level of success? I have a few things that I've tried to do consistently since I joined the emerging ...

What steps did you take to reach your level of success? I’ve always been a very curious person, and have attempted to seek out knowledge and ...

Join Marxent and learn more about the 5 AR/VR Trends you will see in 2022!

What Is a Product Configurator?

A product configurator is software that allows people to customize an item and see how it appears. The ...

2021: The Summer of 3D

As with eCommerce as a whole, social media has become a key location for retail inspiration and purchases during ...

3D rendering is everywhere: real estate, online shopping, gaming, movies and more. Learn about the art and science of 3D visualizations from ...

Many different industries use 3D rendering to help people visualize realistic objects. We share examples of 3D rendering and how verticals use ...

The Marxent 3D Room Planner with Design from Photo is the 3D Room Planner of choice for the top furniture and home improvement ...

The Marxent 3D Room Planner with Design from Photo is a great way to get customers engaged with Room Commerce® - buying whole rooms of ...

About Tony Mitchell, Head of Global Logistics and Digital Innovator for American Furniture Warehouse

Tony Mitchell is the Director of Global ...

About Scott Perry, 3D Pioneer

Scott Perry is a digital home goods pioneer credited with the first launch of Augmented Reality in retail. And ...

We have years of experience working with the glTF format in our 3D solutions. We'll share resources for working with glTF files, organizations ...

We use our years of experience helping developers expedite their work with 3D files to explain how to use GLB files, their relation to glTF, and ...

The most popular 3D file formats for 3D commerce

The number of 3D file formats continues to grow as 3D commerce matures. Five most popular ...

3D commerce is becoming the preferred online shopping option for people around the world. Get the latest statistics to understand why 3D technology is a must-have to increase engagement and convert browsers into buyers. 3D sample videos & a free 3D commerce platform checklist included.

We have years of experience helping developers expedite their work with 3D files. In this guide, we explain how to use FBX files, how to export ...

We have years of experience with all 3D formats and helping developers expedite their work with AR. In this guide, we explain how to use OBJ ...

What is the USDZ file format?

USDZ is a 3D file format that displays 3D and AR content on iOS devices without having to download special ...

Ultimate Guide to Comparing Top 3D Room Planners

Professional and amateur designers save time and money by pre-planning a space. Interior ...

Complete Guide on How to Outsource 3D Modeling Services

Hiring outsourced 3D modeling and rendering services can create a positive impact and ...

NOTE: Welcome to Marxent’s (mostly) annual predictions for the coming year. This isn’t our first video to the prognostication rodeo, and you ...

Expert Tips on Trends, Types of Plans, and 2D and 3D Designs

The right floorplan will make or break a kitchen. Below, you’ll find useful advice ...

The Future of Retail: Predictions, Advice, Trends

The future of retail is a hot topic of conversation. New research emerges daily, stories are ...

What are the Best Augmented Reality SDKs?

Augmented Reality SDKs are not a one-size-fits-all proposition. The only way to answer which is the ...

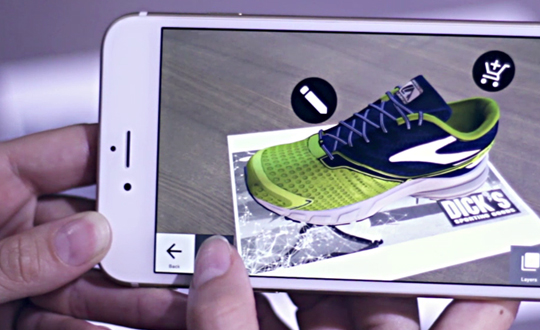

Video recap of 10 best Augmented Reality retail mobile apps of 2019

Looking for an overview of top AR apps in retail? Watch this quick video ...

By now you've heard of "New Retail," defined by Alibaba founder Jack Ma as a seamless merger of offline, online and logistics for a dynamic new ...

NOTE: Welcome to Marxent's (almost) annual predictions for the coming year. This isn't the first time the Marxent team has climbed out on the ...

Macy's CEO Jeff Gennette paid a visit to Recode's Code Commerce event for a Q&A session with CNBC reporter Courtney Reagan. The lively ...

Macy's monthly video series, Beyond the Boardroom, features Chairman and CEO Jeff Gennette getting out into Macy's stores to check out the ...

Why Millennial Furniture Shopping Trends Matter

Did you know that Millennials spend $600 billion annually, and are forecasted to be 35% of ...

Meet the Millennials: 80 million Americans born between 1982 and 1996 who are now upending the way we do just about everything. Though often ...

How much faster are Apple's ARKit apps when paired with Marxent's MxT Tracking? See for yourself:

To learn more about Marxent's Augmented ...

By now you’ve heard that furniture customers are “in person shoppers.” It’s one of the foundational myths of the business. As the story goes, ...

Mozilla, the not-for-profit developer community behind the popular Firefox Web browser, is trying its hand at developing a new product ...

Retail is changing. Online sales are growing, and brick and mortar retailers are adopting new strategies to succeed in a complicated ...

Realism, speed, deployment options — furniture retailers and manufacturers pursuing a 3D product strategy want them all, but at what ...

What does Amazon know that you don’t? It’s a question furniture retailers and manufacturers have to ask themselves in today’s rapidly evolving ...

Apple's ARKit SDK has been a big hit among developers, including those here at Marxent. The software is stable, well-designed, and makes it ...

For more information on Augmented and Virtual Reality solutions, check out our 3D Cloud™ pages, which explain ways businesses are harnessing the ...

Furniture retailers are getting into the holiday spirit

Augmented Reality and Virtual Reality continue to disrupt traditional retail. With the ...

The Augmented Reality revolution is here!

It’s a brave new world for retailers scrambling to keep up with the pace of change. Augmented Reality ...

What's the ARKit fanfare all about?

The launch of Apple's ARKit software, which makes it easier for developers to create AR applications that ...

Google's ARCore: Markerless Augmented Reality for the masses

Google upended the Augmented Reality landscape this week with the announcement of ...

We've been testing ARKit here at Marxent, and the SDK provides a stable and surprisingly robust solution for building AR-enabled apps for Apple devices.

Want to be an Augmented and Virtual Reality retail innovator?

You need a plan.

Successfully implementing Augmented and Virtual Reality retail ...

With ARKit, Ikea and Apple beat Amazon to the punch — and turned AR into a true retail strategy

Apple’s Worldwide Developers Conference keynote ...

For the NFL, Augmented Reality and Virtual Reality are in the game

If you’re reading this, it’s safe to assume that you are, indeed, ready ...

For more information on how retailers are using mixed commerce solutions, check out our Mixed Commerce Glossary, which defines the terminology ...

Editor's Note: This is our first "Deep Dive," a column that will combine available press coverage with the insight of industry insiders to offer ...

For a rundown of the biggest developments in Virtual Reality and Augmented Reality from the past 12 months, check out our 2016 ...

Will Markerless Augmented Reality SLAM Tango?

Let's cut the jargon!

We get a lot of questions about the nuances of Augmented Reality (AR). ...

HTC Vive: What is it and what can it do for your business?

"HTC is working with developers to foster the creation of content that spans ...

There's an old saying that reality is what you make of it. That's more true than ever for 3D artists and designers, who are now working in at least ...

Want realism? Better have the right lighting.

Today I'm going to talk about achieving realism in 3D images through lighting, but first a ...

Furniture retail is ripe for disruption. What has Amazon been waiting for?

Amazon is a disruption company first and foremost — and word has it ...

What if a computer could actually see the world? Project Tango makes it possible

Google (or Alphabet Inc, if you mind the details) is always ...

Mixed Reality (MR) uses software and hardware that interacts with your voice and gestures to co-mingle physical and digital worlds. We’ve been ...

Unified commerce — customer experience is the key

Today’s shoppers interact with their favorite retailers in a variety of ways. They may go to ...

3D modeling has been around for decades, with pioneering work in the field extending back to the 1970s. From those primitive beginnings, we've ...

The retail industry has entered a period of rapid change

Technological innovation is remaking all aspects of the retail experience. Partially ...

What are immersive virtual environments?

Immersion. Immersion. Say it with me, "Immersion!" This word gets thrown around a lot, particularly ...

2017 is yesterday’s news! Check out our top Virtual Reality and Augmented Reality technology trends for 2019 and see what’s in store for the ...

For a look ahead, check out the 5 top Virtual Reality and Augmented Reality technology trends for 2017. And for more information on how ...

What to look for in a Virtual Reality company

You have a great Virtual Reality concept and you've gotten approval to move on it. Your next ...

Experience Virtual Reality and the Lowe's Holoroom at IBS 2016

See how Virtual Reality is changing the building products landscape

At IBS 2016, ...

#SaveTheSamples. It's just the right thing to do.

Another year has come and gone. As Thanksgiving approaches, followed by Christmas and New ...

2016 is yesterday’s news! Check out our top Virtual Reality and Augmented Reality technology trends for 2019 and see what’s in store for the ...

At SXSW, Virtual Reality is taking over

South by Southwest makes or breaks tech trends. The international conference in Austin, Texas has ...

What makes an immersive Virtual Reality experience believable?

Virtual Reality lets players teleport into outer space, make friends with a ...

How Virtual Reality works: The ins and outs of VR technology

In my previous article, What is Virtual Reality?, I examined the “what” and “who” ...

What are the best Virtual Reality devices?

Oculus Rift, Project Morpheus and Vive are just a few of the upcoming technologies for Virtual ...

Virtual Reality that puts humans first

From who we are to how we build Virtual Reality and Augmented Reality solutions, Marxent's team of 50+ ...

Learn the latest about how VR works, the best technologies in 2021 and real-life examples of VR use cases in more than 25 industries.

Why did Apple acquire Metaio?

This week, Apple added Augmented Reality company Metaio to its portfolio. The news broke when a document surfaced ...

Who is creating the best Virtual Reality marketing experiences?

Virtual Reality is emerging as a powerful marketing tool, and several ...

SXSWi 2015 was bubbling with the ultra cool. From the Aether Cone thinking music player and the Guide Dots app for the vision impaired to the ...

SXSW is almost here, and the all-new Marxent Holodeck™ Virtual Reality experience is ready to be unveiled. All the final touches are in place, ...

What is "visual commerce?"

Over the past few years, the notion of "visual commerce" has been defined and redefined. Visual commerce has been ...

SXSW 2015 Virtual Reality: Have fun on the Marxent Holodeck™

If you're headed to SXSW 2015 and are looking for Virtual Reality exhibitors and ...

Looking for the next big ideas in top Augmented Reality? Then Kickstarter is a place you need to visit. Kickstarter has been home to really ...

The technology and fashion worlds are blending, not only because it is cool, but because our lives are becoming ever more tethered to the ...

Let’s be honest. All of us nerds (including the closet nerds) have dreamt of a virtual fantasy world in which we can participate in otherworldly ...

We're stuck in the future: The top 5 Augmented Reality trends for 2015

It's that time of year again! We just published our ...

UPDATE: What are the top Virtual Reality and Augmented Reality trends for 2016?

A lot has changed since we originally published this article in ...

Our Top Augmented Reality and Virtual Reality games ranking has been going strong since 2014. We periodically update this post with the latest ...

As Augmented Reality retail technology evolves, new possibilities for mobile retail applications are emerging. Retailers are increasingly using ...

Augmented Reality (AR) experiences often depend on 3D models and the contributions of specialized 3D artists. Marxent has an entire team of 3D ...

Welcome to Marxent's Augmented Reality resource guide. If you're in the process of learning more about top Augmented Reality apps, technologies ...

The mobile AR landscape is diverse and can be confusing. While some top Augmented Reality companies provide a range of products, services and ...

Augmented Reality is an emerging technology and has its own lingo. This Augmented Reality glossary should help to answer the question "What is ...

There are “AR apps” and then there are apps that truly Augment Reality. The AR apps that truly augment reality deliver immersive, magical, ...

Markerless Augmented Reality (AR) is the preferred image recognition method for AR applications. Learn how it works, the advantages of ...

2014 was a great year for Augmented Reality

If you're interested in the past, keep reading. However, if you're looking for a more current ...

Hexagon Solutions [HEXAb.ST] decided to engage investors with Augmented Reality in their most recent annual report. Instead of allowing the AR ...

The Dayton Business Journal and Dayton Development Coalition have announced the winners of the Innovation Index Awards and will present the ...

SanDisk strives to demonstrate their culture of innovation with high-tech, show-stopping interactive displays and live event experiences. ...

Here's the lowdown on emerging trends in Augmented Reality this spring, featuring some of Marxent's latest projects. Curious about how these ...

These Augmented Reality CES 2014 concepts reveal much about what's ahead in Augmented Reality for consumer engagement. There is so much cool, so ...

The very first Augmented Reality Valentine's Day cards + AR games for kids are here. Instead of just giving classmates flimsy paper cards, with ...

We get a lot of requests for Augmented Reality t-shirts. From trade shows to concerts, marketers are looking to Augmented Reality t-shirts to ...

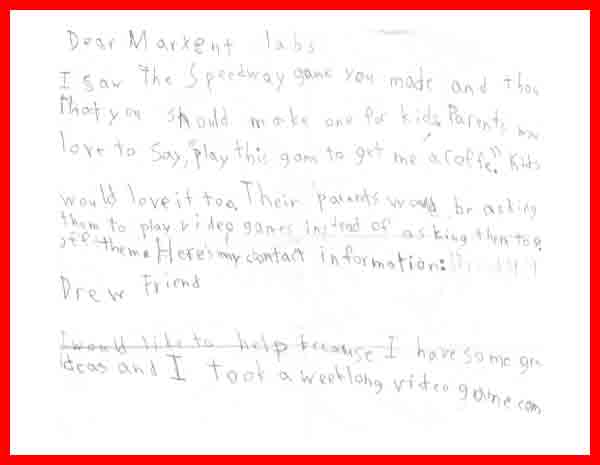

This week we enjoyed hosting Drew Friend, a local 10-year-old video game developer, for lunch and demos in our Dayton office. Drew came and ...

Qualcomm Vuforia AR offers amazing technology that is set to change the creative digital landscape. Enabling experiences such as text recognition, ...

Looking for Augmented Reality marketing ideas and trends? You've found the perfect resource. Sign up for VisualCommerce: Augmented Reality ...

With the introduction of wearable Virtual Reality/Augmented Reality devices such as Oculus Rift and Google Glass, developers and designers will ...

Recently I was considering a visit to Discovery Cove, a local aquatic theme park where visitors can swim with dolphins. Between work and working ...

What could be more perfect for a roomful of budding scientists than an Augmented Reality 3-D animated tour of the human brain? Absolutely ...

Marxent Labs is an Augmented Reality agency specializing in AR apps for live events, marketing campaigns and scalable AR platforms for the ...

If you're considering Augmented Reality for a marketing campaign, live event display, POS or business tool, then you've found the perfect AR ...

Wearable computers are hilarious and who better to capture the humor than Fred Armisen on Saturday Night Live. As the technology is perfected, ...

-- UPDATED - April 30, 2014 -- The USPS Augmented Reality direct mail promotion has been renewed for August/September 2014. Most Augmented ...

We had a great time at NRF Retail's Big Show 2013. Beck Besecker, Marxent's CEO and co-founder, participated in this panel dedicated to ...

“Augmented Reality will be one of the most innovative attractions for promoting our message.” Jackie Catalano, Emerson Industrial ...

The number one reason for retailers to invest in mobile applications and experiences is customer demand, with loyalty a close second - and a ...

VisualCommerce® knows retail, and so does New York City. We've been too busy developing mobile apps and enterprise web services to hit Times ...

It's been a fun year at Marxent Labs, chock full of creativity and innovation. One invention that caught the eye of the Edison Awards™ is the ...

In conjunction with Cornerstone Brands and Ballard Designs, Marxent Labs is pleased to announce the Ballard+ Catalog Companion App, powered by ...

Big things are brewing at Marxent Labs this week. We can't tell you what they are quite yet, but VisualCommerce® is a new software service ...

Augmented Reality glasses have a vast number of useful applications in real-world scenarios. Wearable AR will fundamentally simplify the ...

If you think that Augmented Reality glasses are still a thing of science fiction novels, military movies and gamers, think again. The time for ...

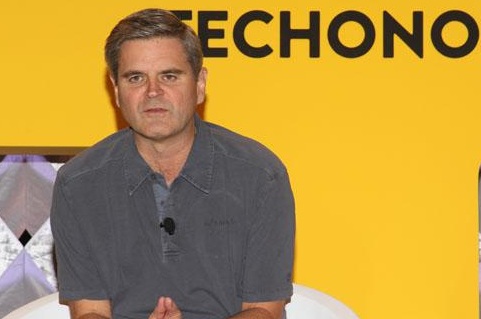

Speaking at Techonomy Detroit today, Steve Case made a clear case for entrepreneurship to be addressed by policy makers. Case, who chairs ...

If you need a mobile app developed for Android or iOS, you should take a close look at your chosen technology partner. Let's face it - it's easy ...

Shoppers love a great holiday sale and the 4th of July is no exception. The retail experience brings holidays to life in so many ways. From ...

Get it while it's hot, hot, hot! Today Moosejaw released the Sweaty & Wet app, an Augmented Reality experience for iOS and Android built by ...

The Marxent "Easter Egg Hunt" is just one example of how we can use gamification of advertising and "virtual button" Augmented Reality ...